In 2017 I was involved in an important work project to transfer all the records from a legacy system, which the vendor (IDOX Group) was deprecating, into another system that was still maintained. We were in some ways fortunate, because both systems had been designed by the same company (IDOX) and they were encouraging us to transfer. We had already delayed the transfer for several years but were aware that we now had to move. The vendor had some tools in place, had staff dedicated to such transfers and was offering favourable consultancy rates.

The amount of data was not horrendous in computing terms, but it was far beyond anything we could have corrected manually, and this was an absolute core system on which several departments completely depended. Staff in those departments are in these systems from the moment they start the work day to the end. Generally it's unusual for them to be down for more than 5 minutes in a month, pretty much all work stops when they stop, and in no circumstances could they be down for more than a day without special dispensation and coordination to manage customer expectations.

The whole project was a success, although it was challenging. Here is an outline of the steps we took. As ever, the order is important for most of the steps. I had written something on this before but consider this to be a more accurate rundown (see here).

Step 1

Inform the managers of all sections involved and ensure they are on board. Identify and ring-fence the budget.

Step 2

Appoint a project manager on both the vendor and the client side.

Draw together a team to perform the transformation.

Step 3

Draft a timetable estimating how long the project will take, including planning for tutorials on the systems and for consultancy.

Step 4

Ask managers on all sides to put forward staff willing to be involved.

Step 5

Identify any gaps in knowledge and start to identify how these can be remedied. Kick off and complete the acquisition of any staff needed to fill those gaps.

Step 6

Meet with lead staff to confirm buy-in. Request buy-in from staff, including ring-fencing of holidays etc., to ensure key staff are available at the required times.

Step 7

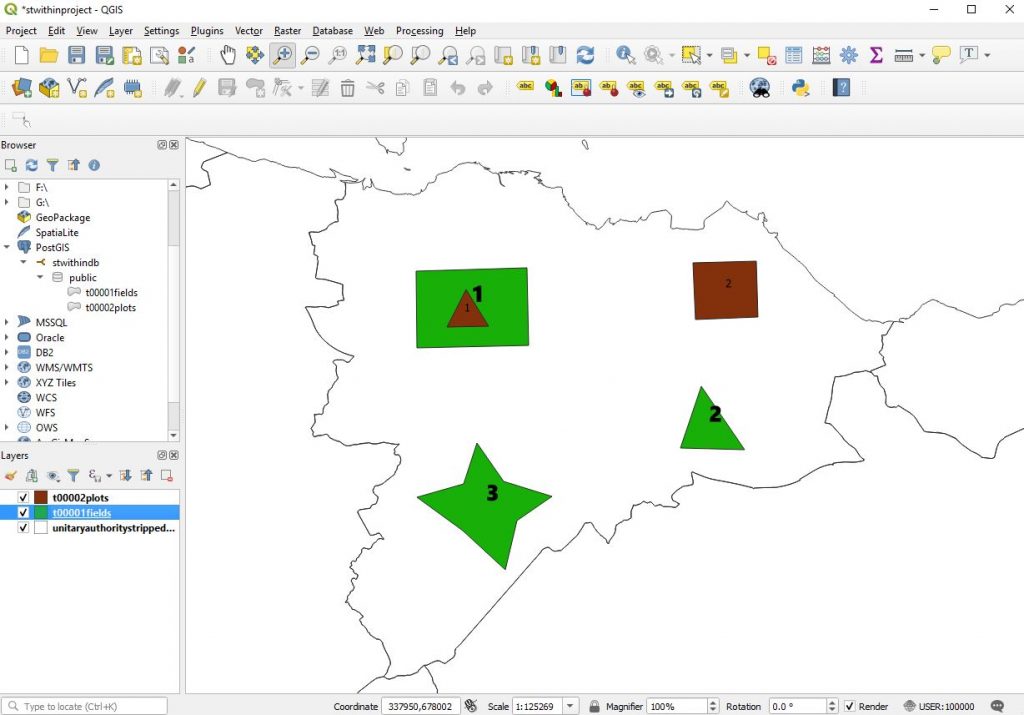

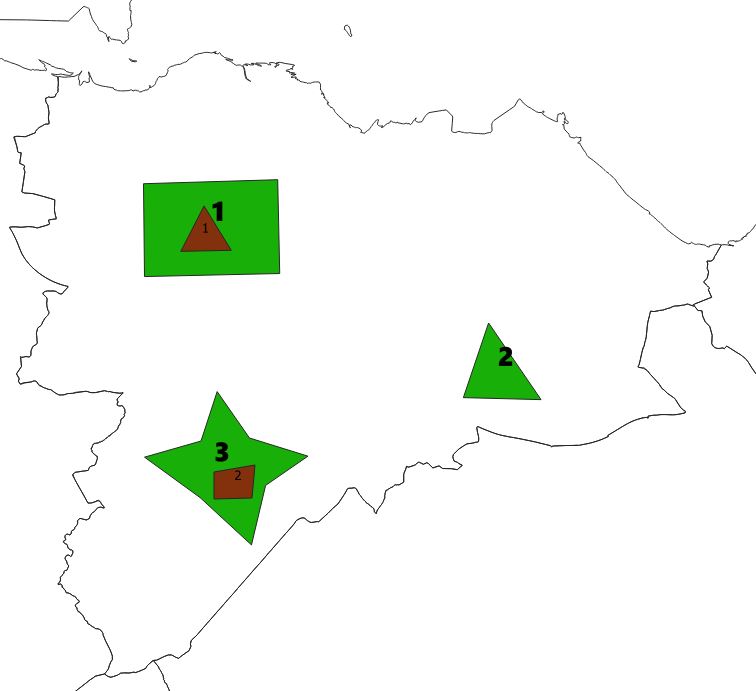

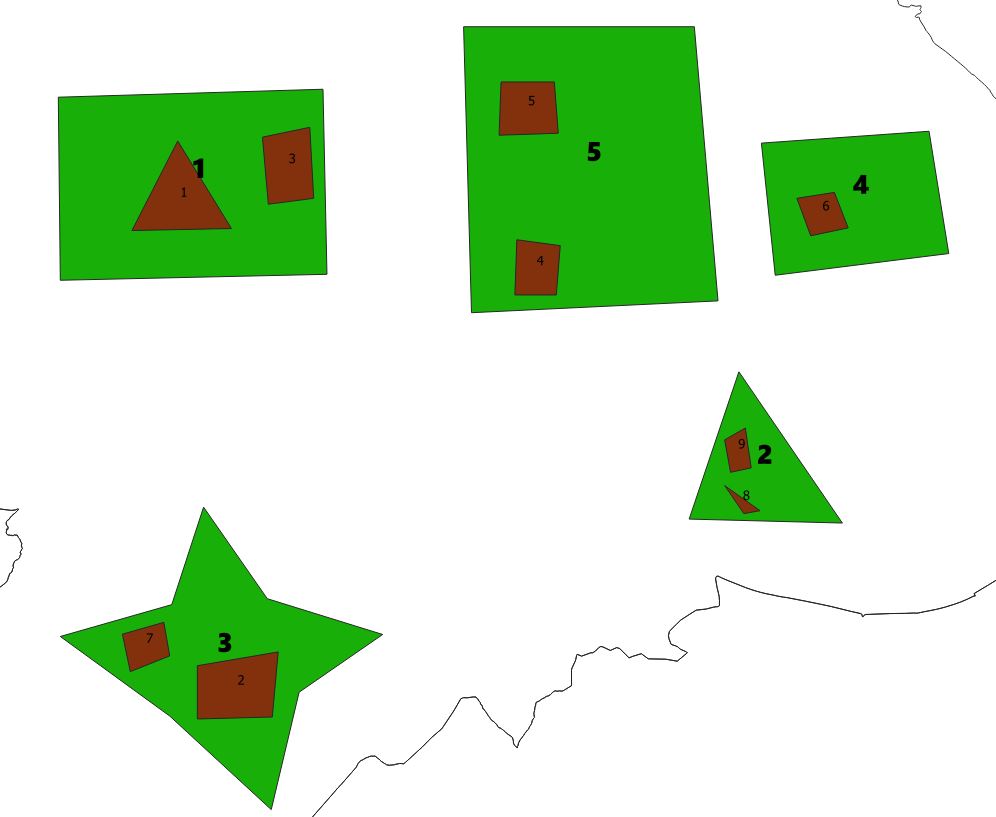

Set up test systems that everyone involved has access to, and ensure that individuals can view the old and new systems simultaneously. Ensure that the domain specialists can identify the processes that will be mirrored from the old system to the new system.

Step 8

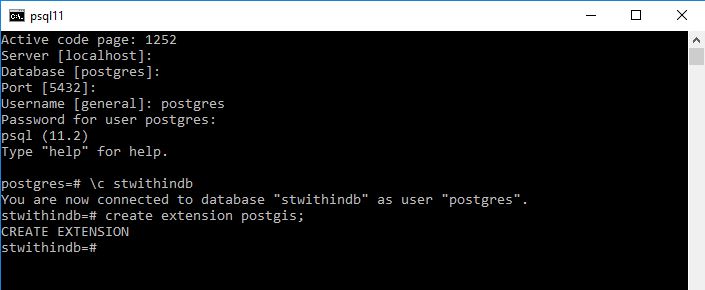

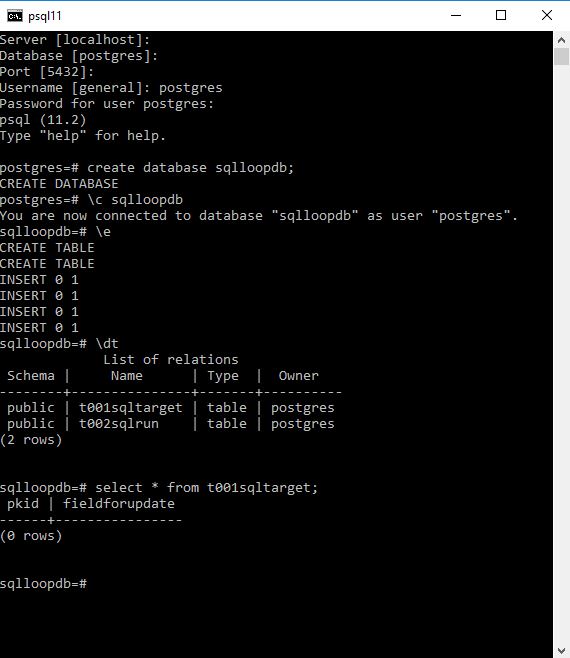

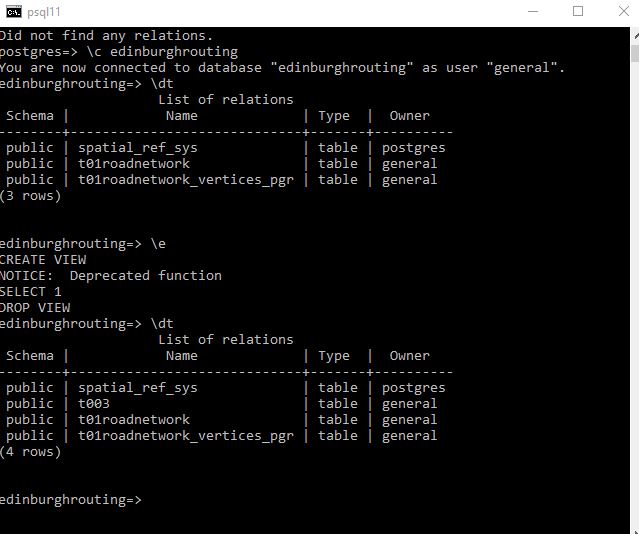

Give the DBAs, or whoever will be doing the data transfer, access to the source databases so that they can start thinking about how to pull out the information.

Step 9

Training in the new systems for all individuals concerned.

Step 10

In the new system, start tasking individuals with working out how they are going to do the simple processes, e.g. register a record, approve a record, alter a record and get reports out. If possible, allow the new champions to start defining things like reports.

Step 11

Start making up any new lookup fields, comparing them with the old lookups, and start tasking individuals with creating the reports and letters that will be needed.

Step 12

Start mapping the data from the old system to the new system. Excel spreadsheets can be used for this, showing each piece of data in the old system and the field it will go into in the new system. Divide this task up between domain users; this step needs to be done after the old and new systems are on the domain users' machines. As part of this, the applications in question should, if possible, expose the table and field names of the source and target fields. With the systems we were involved in, this was possible for both the old and the new system.

For each form on the two systems, try to identify the following:

Source table.field Target table.field

Also get them to map the lookup table values where a direct transfer is not possible or where alias IDs are used in these lookups.

Source table.field.value = Equivalent target table.field.value
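To illustrate, the two mapping documents can be thought of as two simple lookups: one from source field to target field, and one from source lookup value to target lookup value. Below is a minimal Python sketch of that idea. Every table, field and code name in it is hypothetical and made up for illustration; none of them come from the systems we actually worked with.

```python
# Hypothetical names throughout: these are NOT the real table or field names.

# Field mapping: "source_table.field" -> "target_table.field"
FIELD_MAP = {
    "OLD_CASE.REF_NO": "new_record.reference",
    "OLD_CASE.STATUS_CD": "new_record.status_id",
}

# Lookup mapping: (source field, source value) -> equivalent target value
LOOKUP_MAP = {
    ("OLD_CASE.STATUS_CD", "A"): 1,  # e.g. "Approved"
    ("OLD_CASE.STATUS_CD", "R"): 2,  # e.g. "Registered"
}


def translate(source_field, value):
    """Return (target_field, target_value) for one source value."""
    target_field = FIELD_MAP[source_field]
    # Fields without a lookup entry keep their value unchanged.
    target_value = LOOKUP_MAP.get((source_field, value), value)
    return target_field, target_value


def unmapped_lookup_values(source_field, seen_values):
    """List values seen in the source that have no lookup mapping yet."""
    mapped = {v for (f, v) in LOOKUP_MAP if f == source_field}
    return sorted(set(seen_values) - mapped)
```

A helper like `unmapped_lookup_values` is handy for handing back to domain users: it lists the source codes they still need to decide on before the transfer can be trusted.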

Step 13

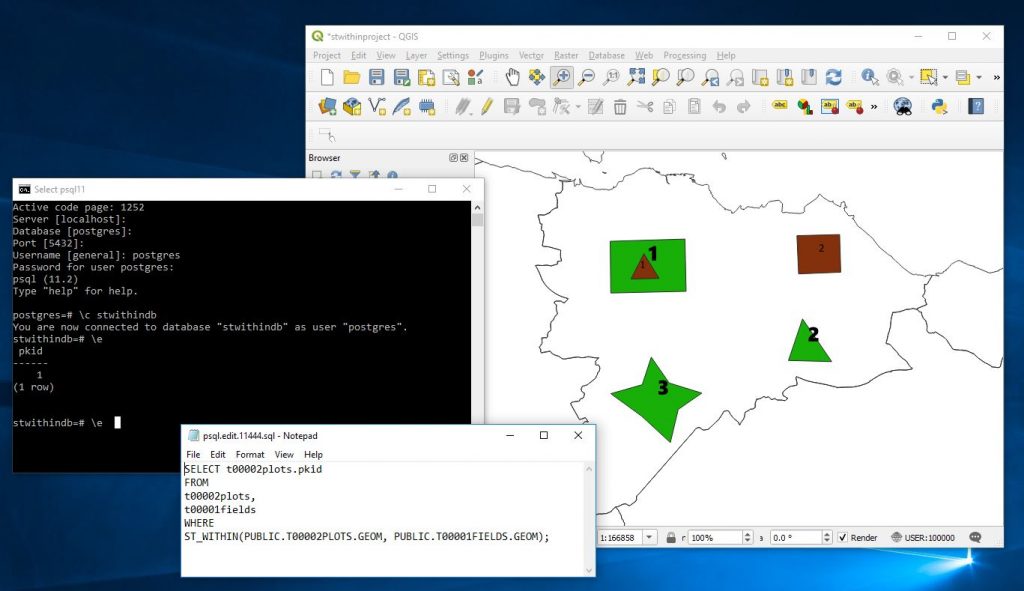

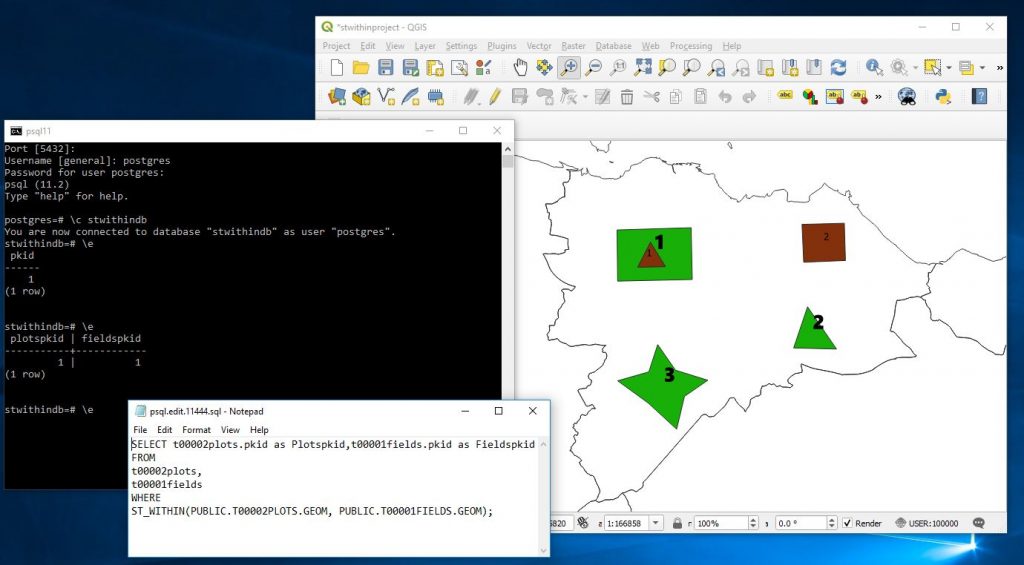

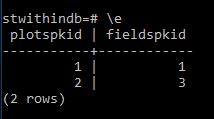

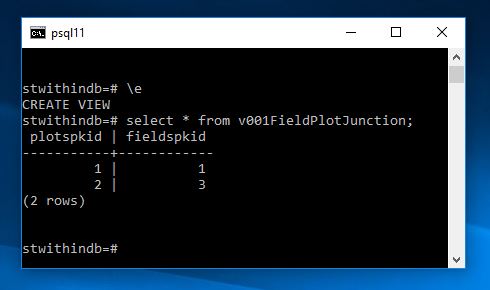

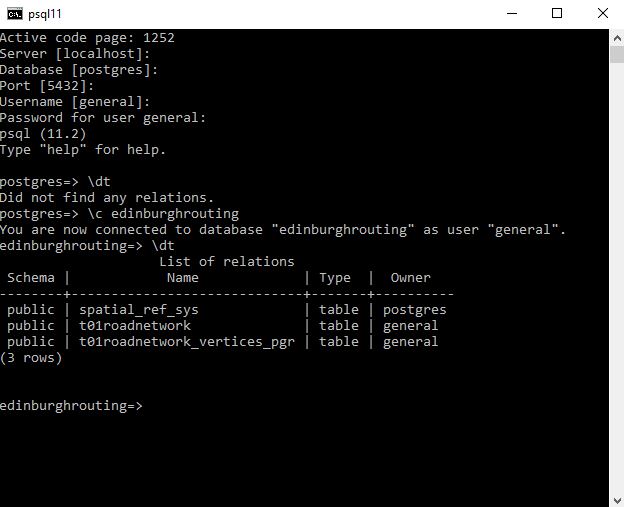

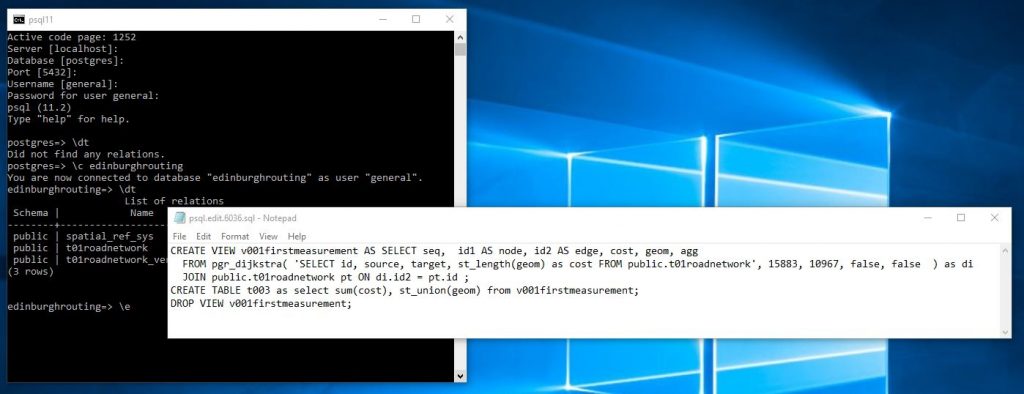

Give both mapping documents to the ETL people so they can start writing the queries. It is unlikely that there will be a straight transfer across from table to table. Field and table names can be expected to be completely different, and in certain places the table structure will be different too. For that reason it is good to have a really nice schema diagram of both source and target.
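As an illustration of what "not a straight transfer" can look like, here is a small sketch using Python's built-in `sqlite3` module. It assumes a hypothetical wide source table (`old_case`) that has to be split across two target tables (`new_person` and `new_record`), with a hypothetical `status_map` table holding the lookup mapping. The schemas and data are invented for the example and are not the real ones; our actual scripts were plain SQL against the vendor databases.

```python
import sqlite3

# Hypothetical schemas: one wide source table feeding two target tables.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE old_case (ref_no TEXT, applicant TEXT, status_cd TEXT);
    CREATE TABLE new_person (person_id INTEGER PRIMARY KEY, name TEXT UNIQUE);
    CREATE TABLE new_record (reference TEXT, person_id INTEGER, status_id INTEGER);
    CREATE TABLE status_map (old_code TEXT, new_id INTEGER);

    INSERT INTO old_case VALUES ('C1', 'Smith', 'A'),
                                ('C2', 'Smith', 'R'),
                                ('C3', 'Jones', 'A');
    INSERT INTO status_map VALUES ('A', 1), ('R', 2);
""")


def transfer(conn):
    """Load both target tables from the single source table."""
    # Applicants repeat on every source row, so de-duplicate them first.
    conn.execute("""
        INSERT INTO new_person (name)
        SELECT DISTINCT applicant FROM old_case ORDER BY applicant
    """)
    # Then load the records, resolving the person and the lookup via joins.
    conn.execute("""
        INSERT INTO new_record (reference, person_id, status_id)
        SELECT c.ref_no, p.person_id, m.new_id
        FROM old_case AS c
        JOIN new_person AS p ON p.name = c.applicant
        LEFT JOIN status_map AS m ON m.old_code = c.status_cd
    """)
    conn.commit()


transfer(conn)
```

The `LEFT JOIN` on the lookup table is a deliberate choice in this sketch: a record with an unmapped status still comes across (with a NULL `status_id`) rather than silently disappearing, which makes the gap easy to find afterwards.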

Step 14

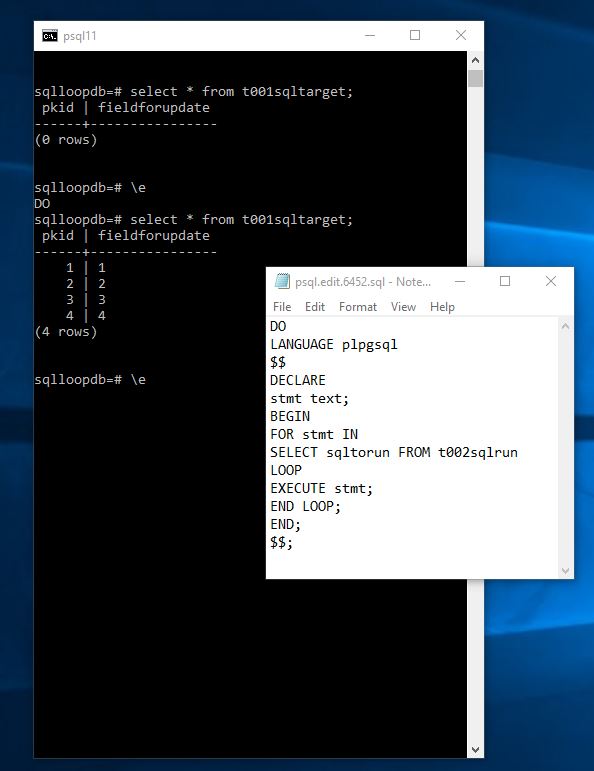

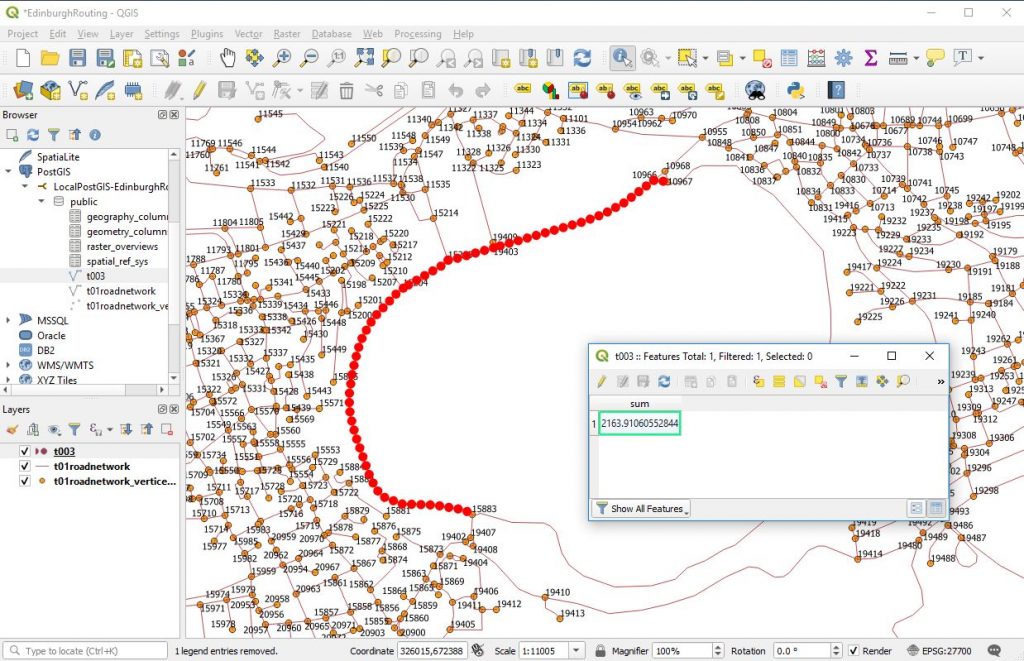

Allow the data people to write scripts that can be run live against the current source system. If necessary it doesn't need to be truly live: you could copy the database every night and then run the scripts against the one-day-old back end, which is what we did. This means work can go on in the old system and then, at the touch of a button, the data can be transferred.

Encourage the DBAs to run these scripts every day, so that running them for go-live is absolutely no issue. Our scripts only took about half an hour to run, so this wasn't a problem. I was personally involved in writing the SQL for them, and I had systems in place to cross-tabulate the number of records coming into each new table, so I could see new records and information that had been entered manually into the old system trickling in and then being transferred.
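The per-table counting can be sketched along the following lines. This is not the SQL we actually used, just a minimal Python illustration of the idea using the built-in `sqlite3` module: count the rows in each target table after every run and compare against the previous run. The table names are hypothetical.

```python
import sqlite3


def table_counts(conn, tables):
    """Return {table_name: row_count} for each listed table."""
    counts = {}
    for table in tables:
        # Table names come from a fixed, trusted list, so building the
        # query text from them is acceptable in this sketch.
        row = conn.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()
        counts[table] = row[0]
    return counts


def count_changes(previous, current):
    """Return {table_name: delta} for tables whose count changed."""
    changes = {}
    for table, count in current.items():
        delta = count - previous.get(table, 0)
        if delta != 0:
            changes[table] = delta
    return changes


# Small demonstration with hypothetical target tables.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE new_record (reference TEXT)")
conn.execute("CREATE TABLE new_person (name TEXT)")
conn.executemany("INSERT INTO new_record VALUES (?)", [("C1",), ("C2",)])

yesterday = table_counts(conn, ["new_record", "new_person"])
conn.execute("INSERT INTO new_record VALUES ('C3')")  # simulates the next run
today = table_counts(conn, ["new_record", "new_person"])
changes = count_changes(yesterday, today)
```

Run daily, a comparison like this makes it obvious when a table stops growing or suddenly jumps, which is usually the first sign that a script or a mapping needs attention.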

Step 15

Test data input into new system

Step 16

Check the test data input into the new system with reference to domain users.
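One automated check that could sit alongside the domain users' review is a reconciliation query that lists source records with no counterpart in the target. The sketch below assumes hypothetical `old_case` and `new_record` tables joined on a reference number and uses Python's built-in `sqlite3` module; it illustrates the technique rather than the checks we actually ran.

```python
import sqlite3

# Hypothetical tables and data, with 'C2' deliberately missing from the target.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE old_case (ref_no TEXT);
    CREATE TABLE new_record (reference TEXT);
    INSERT INTO old_case VALUES ('C1'), ('C2'), ('C3');
    INSERT INTO new_record VALUES ('C1'), ('C3');
""")


def missing_from_target(conn):
    """Return source references that have no matching target record."""
    rows = conn.execute("""
        SELECT c.ref_no
        FROM old_case AS c
        LEFT JOIN new_record AS r ON r.reference = c.ref_no
        WHERE r.reference IS NULL
        ORDER BY c.ref_no
    """).fetchall()
    return [row[0] for row in rows]


missing = missing_from_target(conn)
```

A list like `missing` gives domain users concrete records to look up in both systems, rather than asking them to spot gaps by eye.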

Step 17

Confirm the go-live date and ensure staff are available to deal with issues.

Step 18

Go live to production and start all new procedures. Ensure key technical and domain staff are on hand to come up with flexible solutions to any problems.

Step 19

Project review, followed by ongoing maintenance and improvement of the new system.

Step 20

After a suitable time, turn off the old system if possible.